
Trustworthy AI: How enterprises can move beyond pilots to scalable, reliable AI

Enterprises struggle to scale AI due to a lack of trust. Success requires regulatory alignment, strong data quality, and treating AI as a continuously evolving product. POCs often fail due to unclear goals, vague metrics, and weak risk controls, so executives must define ownership, measurable outcomes, safety requirements, and paths to production upfront. Trustworthy AI ties KPIs to real financial impact, enforces governance frameworks, and follows a structured maturity model with stage-gates for compliance and accountability. With disciplined processes, outcome-first thinking, and robust governance, organizations can move beyond pilots and deploy AI reliably and safely at scale.

When generative AI burst onto the scene, everyone sat back and looked at it in amazement. Soon, the euphoria over AI was replaced with skepticism. The lack of trust in AI systems has meant enterprises struggle to move AI from pilot projects into meaningful production. According to McKinsey, fewer than 10 percent of AI initiatives deliver sustainable business impact at scale.

This raises the question: What went wrong and continues to go wrong? It cannot be a lack of technology because models are more powerful than ever. The real challenge is a trust deficit. People lack the trust that AI systems are reliable, governed, safe, and aligned with business goals. And without trust, pilots remain experiments, incapable of driving the efficiency, accuracy, and innovation that leaders expect.

This blog discusses ways to inspire confidence and trust in AI systems and how enterprises can move beyond pilots to scalable, reliable AI.

From Pilots to Production: What Changes Now

It takes as much discipline as it takes technology to move from AI demos to enterprise-grade production. In pilots, teams can afford flexibility. However, production requires rigor.

Three changes matter most.

1. Regulation. Regulation is real and indispensable to your success. U.S. regulators are moving faster than many executives realize. The White House’s Blueprint for an AI Bill of Rights (2022) focuses on transparency, fairness, and accountability. The National Institute of Standards and Technology (NIST) has issued its AI Risk Management Framework to guide organizations in assessing and managing risks. Enterprises that treat regulation as an afterthought will face delays and reputational risks.

2. Going beyond model choice. Enterprises have invested billions of dollars in choosing the ‘best’ AI models, yet ‘best’ is subjective: its definition changes from enterprise to enterprise and boardroom to boardroom. Data is not subjective. Enterprises often overinvest in model selection while underinvesting in data quality, monitoring, and retraining practices. Gartner notes that 80 percent of AI project time is spent on data preparation and governance rather than model building. That investment is essential for scaling.

3. Treating AI like a product, not just a project. What is the difference between a project and a product? A project ends when it is delivered. A product evolves, adapts, and receives continuous support. Enterprises must apply product-management disciplines to AI: feature roadmaps, post-deployment service level objectives (SLOs), and long-term ownership. Without this mindset, pilots succeed technically but collapse in operations.

The POC Trap: Unclear Goals and Metrics

Proofs of concept (POCs) are valuable for exploration, but they can also become traps. Research by Capgemini found that scaling AI is extremely challenging for enterprises: only 13 percent of organizations move AI use cases to industrialized scale. The primary reasons are unclear goals, weak metrics, and inadequate risk controls.

Executives must ensure POCs answer four written questions before they begin:

- Who owns the business outcome? Assign a budget holder, not just a technical sponsor.

- What is the unit of value? Define whether success is measured in hours saved, dollars earned, or revenue protected. Vague success metrics won’t get you anywhere. Measurable outcomes give you a clear idea of your trajectory.

- What is the path to production? Identify data pipelines, rollout processes, and support models before launching the POC.

- How will safety be guaranteed? Address privacy, bias, and security risks in writing. Treat these risks with the seriousness they demand; left unaddressed, they create complications that are far harder to untangle later.

Define Success Upfront: Measurable Outcomes and Accountability

Trustworthy AI requires measurable, accountable success. Accuracy metrics mean little in themselves. A model with 95 percent accuracy has no meaning if it does not improve financial outcomes.

Executives must tie AI KPIs to profit-and-loss (P&L) impact. For example:

- A customer service AI should reduce average handle time or increase net promoter score.

- A fraud-detection system should lower fraud losses within a given time.

Success should also be timebound and benchmarked against baselines. A target might read: “Reduce invoice reconciliation cycle time from 15 days to 7 within 90 days of deployment.”
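A target like the one above can be written down as data so that nobody debates later what "success" meant. The sketch below is illustrative only: the `KpiTarget` class and its field names are assumptions made for this example, and it assumes a metric where lower is better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    """A timebound KPI target measured against a baseline (lower is better).

    Hypothetical structure for illustration; not taken from any framework.
    """
    name: str
    baseline: float      # value before deployment, e.g. 15 days
    target: float        # value to reach, e.g. 7 days
    deadline_days: int   # days after deployment allowed to reach the target

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far, clamped to [0, 1]."""
        gap = self.baseline - self.target
        if gap <= 0:
            raise ValueError("this sketch assumes the target is below the baseline")
        return max(0.0, min(1.0, (self.baseline - current) / gap))

    def is_met(self, current: float, days_since_deployment: int) -> bool:
        """True only if the target value was reached within the deadline."""
        return current <= self.target and days_since_deployment <= self.deadline_days


# The invoice reconciliation example: 15 days down to 7 within 90 days.
invoice_target = KpiTarget("invoice reconciliation cycle time (days)", 15, 7, 90)

print(invoice_target.progress(11))     # 4 of the 8-day gap closed
print(invoice_target.is_met(7, 85))    # reached inside the 90-day window
print(invoice_target.is_met(7, 120))   # reached, but too late to count
```

Keeping the deadline inside the target definition makes the "timebound" part enforceable rather than aspirational.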

Ownership is another critical element. Every initiative should have three accountable leaders: a business sponsor, a technical directly responsible individual (DRI), and a risk DRI. These roles ensure business outcomes, technical feasibility, and compliance remain aligned.

Furthermore, enterprises must plan for post-deployment. Service Level Objectives (SLOs) should include acceptable downtime, response times, and retraining schedules. Trust is built not in launch week but in the months and years that follow.

Outcome-First Path to Scale

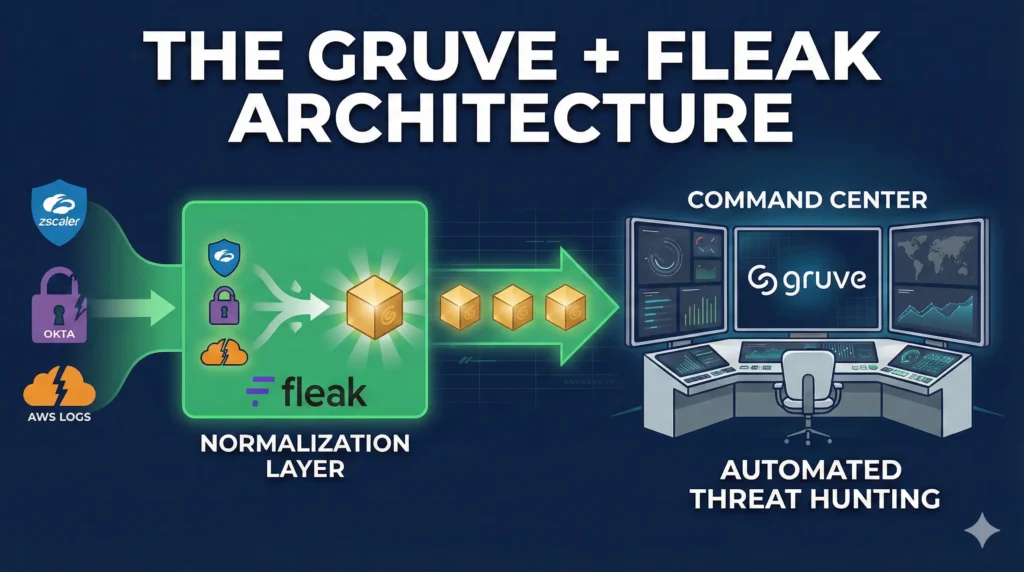

Trust is built over time, on outcomes rather than empty talk, so target outcomes first. As an example, Gruve’s Quoting Agent drives ROI by dramatically reducing the time spent generating, approving, and correcting quotes.

On average, organizations see a 60%+ reduction in quoting time, freeing up their revenue and finance teams to focus on closing deals instead of admin work. By minimizing manual entry and errors, the agent helps shorten the sales cycle. Faster, more accurate quotes directly improve win rates, accelerate revenue recognition, and provide time savings.

Sellers who previously spent ~10 hours per week on quoting now save over 400 hours per year. At an average seller cost of $50/hr, that’s a $20K annual time savings value per seller.
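The arithmetic behind that figure is simple enough to check in a few lines. This is a minimal sketch using the numbers quoted above (400 hours saved, $50 per hour); the function name and the team size in the usage line are hypothetical.

```python
def annual_time_savings_value(hours_saved_per_year: float, hourly_cost: float) -> float:
    """Dollar value of time saved per seller per year."""
    return hours_saved_per_year * hourly_cost


per_seller = annual_time_savings_value(hours_saved_per_year=400, hourly_cost=50)
print(f"${per_seller:,.0f} per seller per year")

# Hypothetical roll-up for a 25-seller team (team size is an assumption).
team_size = 25
print(f"${per_seller * team_size:,.0f} per year across {team_size} sellers")
```

Swapping in your own hours and loaded hourly cost gives a quick first estimate before a fuller business case is built.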

Minimal Viable Flow (60–90 days).

A trusted scale path includes:

- Mapping existing processes and establishing baselines.

- Plumbing the data and ensuring clean, labeled records.

- Deploying intelligent document capture and rules.

- Validating with users, addressing exceptions, and expanding incrementally.

Example KPI Dashboard.

Enterprises should monitor:

- Average cycle time per invoice.

- Touchless processing rate.

- Exception rate.

- Duplicate detection and prevention.

- Hours saved from rework.
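As a rough illustration, the dashboard metrics above could be computed from per-invoice records like this. The `InvoiceRecord` fields and the sample data are assumptions made for the sketch; a real deployment would map them to whatever the invoicing system actually logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InvoiceRecord:
    """One processed invoice. All field names are hypothetical."""
    cycle_days: float            # days from receipt to completion
    manual_touches: int          # 0 means no human intervention was needed
    had_exception: bool          # routed to an exception queue
    duplicate_caught: bool       # flagged as a duplicate before payment
    rework_hours_avoided: float  # estimated hours of rework saved


def kpi_dashboard(records: list[InvoiceRecord]) -> dict[str, float]:
    """Aggregate per-invoice records into the five dashboard KPIs."""
    if not records:
        raise ValueError("need at least one invoice record")
    n = len(records)
    return {
        "avg_cycle_days": sum(r.cycle_days for r in records) / n,
        "touchless_rate": sum(r.manual_touches == 0 for r in records) / n,
        "exception_rate": sum(r.had_exception for r in records) / n,
        "duplicates_prevented": float(sum(r.duplicate_caught for r in records)),
        "rework_hours_saved": sum(r.rework_hours_avoided for r in records),
    }


# Made-up sample data, for illustration only.
sample = [
    InvoiceRecord(6, 0, False, False, 0.5),
    InvoiceRecord(8, 0, False, False, 0.5),
    InvoiceRecord(7, 2, True, False, 0.0),
    InvoiceRecord(11, 1, True, True, 1.0),
]
dashboard = kpi_dashboard(sample)
for name, value in dashboard.items():
    print(f"{name}: {value}")
```

Computing every KPI from the same records keeps the dashboard consistent with the baselines established during process mapping.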

The Gruve AI Maturity Model

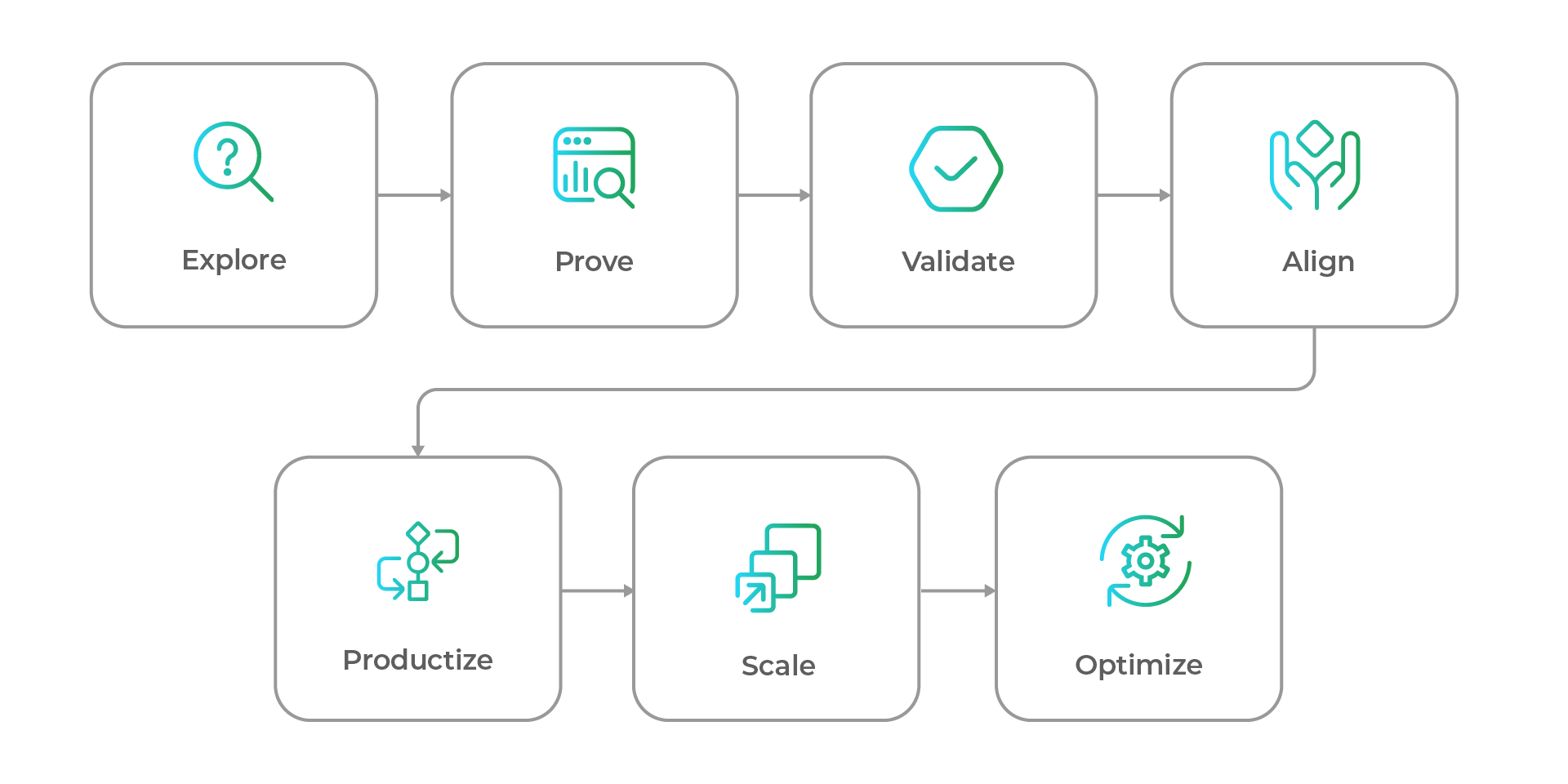

Gruve applies a structured maturity path to avoid POC drift:

- Explore: Identify use cases with measurable business outcomes.

- Prove: Run timeboxed pilots with defined KPIs.

- Validate: Test early with real users to ensure solution matches actual needs.

- Align: Engage cross-functional stakeholders to surface constraints and build buy-in.

- Productize: Establish data pipelines, ownership, and SLOs.

- Scale: Deploy across business units with governance guardrails.

- Optimize: Continuously improve with feedback loops and monitoring.

Stage-gates at each step—complete with documented KPIs, compliance checks, and accountability sign-offs—ensure initiatives remain on track. This disciplined path builds organizational trust.
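One way to picture a stage-gate is as a simple check that blocks promotion until the paperwork is complete. The sketch below is not Gruve's implementation: the `GateReview` structure is hypothetical, and requiring sign-off from the business sponsor, technical DRI, and risk DRI at every gate is an assumption borrowed from the ownership roles described earlier.

```python
from dataclasses import dataclass, field

# Stage names follow the maturity path listed above.
STAGES = ["Explore", "Prove", "Validate", "Align", "Productize", "Scale", "Optimize"]

# Assumption: every gate needs all three accountable leaders to sign off.
REQUIRED_SIGNOFFS = {"business sponsor", "technical DRI", "risk DRI"}


@dataclass
class GateReview:
    """Evidence collected at a stage-gate (hypothetical structure)."""
    kpis_documented: bool
    compliance_checked: bool
    signoffs: set[str] = field(default_factory=set)


def gate_blockers(review: GateReview) -> list[str]:
    """List everything still preventing promotion to the next stage."""
    blockers = []
    if not review.kpis_documented:
        blockers.append("KPIs not documented")
    if not review.compliance_checked:
        blockers.append("compliance check missing")
    for role in sorted(REQUIRED_SIGNOFFS - review.signoffs):
        blockers.append(f"missing sign-off: {role}")
    return blockers


def next_stage(current: str, review: GateReview) -> str:
    """Advance one stage only when the gate has no blockers."""
    if gate_blockers(review):
        return current
    index = STAGES.index(current)
    return STAGES[min(index + 1, len(STAGES) - 1)]


complete = GateReview(True, True, {"business sponsor", "technical DRI", "risk DRI"})
incomplete = GateReview(True, True, {"business sponsor", "technical DRI"})

print(next_stage("Prove", complete))    # gate passed, moves forward
print(next_stage("Prove", incomplete))  # stays put until risk signs off
print(gate_blockers(incomplete))
```

Returning the list of blockers, rather than a bare pass/fail, tells the team exactly what is missing before the next review.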

Governance Essentials You Shouldn’t Skip

It bears repeating: technical excellence and performance alone cannot inspire trust in AI. Trust in AI comes from governance. Executives should implement three essentials:

- Policy baseline. Draft and enforce AI usage policies that reflect organizational values and regulatory requirements. Policies should be unambiguous and clearly highlight acceptable use, risk thresholds, and escalation procedures.

- Management system. Standards such as ISO/IEC 42001 and the NIST AI Risk Management Framework provide a structure for AI management. Though they are not mandatory, they help enterprises systematize risk assessments, bias reviews, and monitoring.

- Regulatory readiness. U.S. federal and state regulators are shaping AI oversight quickly. Preparing now—by documenting model lineage, data sources, and governance practices—will help avoid costly delays later.

Governance is the foundation for trust. When boards, regulators, and customers see that AI is governed responsibly, confidence grows.

Conclusion

AI is no longer a question of capability. Enterprises have the tools, models, and data. The real question is whether they can be trusted to deliver reliable, safe, and measurable outcomes at scale. Pilots are easy; production is hard. However, production is where value is created.

By defining outcomes, assigning accountability, following structured maturity models, and embedding governance, executives can turn AI from experiments into engines of growth, unlocking the true speed at which their organization can scale. Trust is built through sound choices and disciplined processes. It is not a question of chance but of making the right decisions at the right time to inspire trust and confidence.

For leaders who want to move beyond pilots, the path is clear. The time to act is now. Invest in trustworthy AI strategies, and your organization will not just adopt AI—you will scale it, govern it, and lead with it.

Ready to scale your AI with confidence? Learn how to build reliable, governed, and measurable AI systems that deliver real value.