Artificial intelligence is transforming how organizations work, think, and protect themselves. However, the evolution of AI also comes with risks. Recent studies reveal that AI is changing security forever.

This blog discusses how AI is no longer deterministic, why security must become part of the infrastructure, and how enterprises can respond to this rapid transformation. It also offers a practical roadmap for businesses navigating a world where AI can generate attacks as fast as it can detect them.

The New Reality: AI Is No Longer Deterministic

AI has evolved beyond predictable systems and is no longer deterministic. Identical inputs always produced identical results in traditional computing. That is no longer the case. With generative AI, the same prompt can give different outputs depending on the data set, the context, or even the model version.

This non-deterministic behavior makes AI less predictable and harder to secure. A model’s responses can evolve over time, altering the outcomes users receive. This unpredictability can introduce errors, biases, or even vulnerabilities that attackers might exploit.

To manage unpredictability in AI’s response, enterprises must:

- Continuously monitor AI models to ensure input-output integrity.

- Validate responses dynamically, not statically.

- Detect harmful or inaccurate outputs early through automated checks.

It is crucial to monitor AI models continually to ensure input-output integrity, marking a shift in mindset from protecting static systems to securing evolving, context-driven intelligence.

Security as Infrastructure: The Foundation of AI Safety

Earlier, cybersecurity was often an afterthought. That framework AI now demands security as an infrastructure.

The AI ecosystem spans applications, databases, and networks. Every data packet must be protected because it is non-negotiable for security to be embedded from the start of every AI deployment.

Security as Infrastructure demands:

- Integrating security by design at the data center and cloud level.

- Applying zero trust principles from the software to the network to the edge.

- Securing both data in motion and data at rest to ensure end-to-end resilience.

It is worth remembering that adding security post-deployment can disrupt operations and face resistance from IT and business teams. If security becomes an afterthought, it’s hard to put in after the data center is built.

This shift means treating cybersecurity as the foundation of the architecture and not just a layer.

Protecting End Users and Sensitive Data

New risks are emerging every day as employees adopt generative AI tools like ChatGPT or Perplexity. Without proper safeguards, users may unintentionally expose proprietary data or receive biased or harmful outputs. For example, uploading configuration files, statements of work, or IP addresses into public AI models could leak critical information.

It is indispensable for an organization to protect its confidential information and its end users. Failure to protect confidential information can lead to major financial losses, while neglecting end-user safety can invite regulatory penalties.

The following steps help ensure AI tools enhance productivity without compromising enterprise integrity.

- Implement data loss prevention (DLP) solutions to stop sensitive uploads.

- Monitor prompt activity for bias, toxicity, or data leakage.

- Restrict access to models based on employee roles and security clearance.

The Urgency of Model Validation and Runtime Security

AI models, unlike traditional software, lack a common vulnerabilities database. Malicious actors can send cleverly created prompts that bypass guardrails, leading to harmful, biased, or unintended outputs. In the absence of a common vulnerability database in AI models, you cannot rely totally on guardrails built into the software.

The lack of a common vulnerability database in AI models makes it essential for organizations to build their own validation frameworks.

Organizations must do the following to overcome the challenges of AI models lacking a common vulnerability database:

- Run continuous model validation to confirm safe and accurate outputs.

- Deploy dynamic AI runtime security, or a firewall for AI, to monitor inputs and outputs in real time.

- Flag and block sensitive or malicious prompts before they reach users.

These evolving defenses are like having a firewall sit in front of your AI application to capture all prompts and decide whether to allow or block them.

As attackers increasingly use AI to automate and accelerate attacks, runtime defenses become essential.

The Rise of AI-Powered Attacks

AI is transforming cybersecurity on both sides of the battlefield. Attackers now use AI to generate and deploy exploits faster than ever. They can now do harm in minutes or hours what once took weeks or months

Traditional defenses can’t keep pace with the speed and complexity of AI-driven threats. It is important to adopt AI-powered security solutions capable of learning and responding automatically.

Effective defenses include:

- AI and machine learning integrated into security tools to detect and adapt in real time.

- Security information and event management (SIEM) systems with AI-driven correlation.

- Automated threat hunting that identifies and closes vulnerabilities before attackers act.

Today, it is crucial for organizations to have solutions that use AI itself so they can adapt quickly and stop increasingly sophisticated cyberattacks.

The future of cybersecurity lies in AI defending against AI. It will not be an exaggeration to call it an AI race where automation, speed, and intelligence define survival.

Building Collaborative and Skilled Security Teams

Technology alone cannot solve AI-era security challenges. People and processes are equally critical. The age of AI demands cross-functional expertise that bridges network, data, and AI knowledge.

Effective protection begins with discovery and understanding where AI is being used, how models are deployed, and what data they access.

Security engineers must now:

- Understand AI infrastructure, not just networks or firewalls.

- Collaborate with developers and data scientists to align policies.

- Develop shared accountability for AI systems and their behavior.

It is essential for security engineers to understand not only networks but also AI, data, and software components. This multidisciplinary approach is essential for sustainable AI governance.

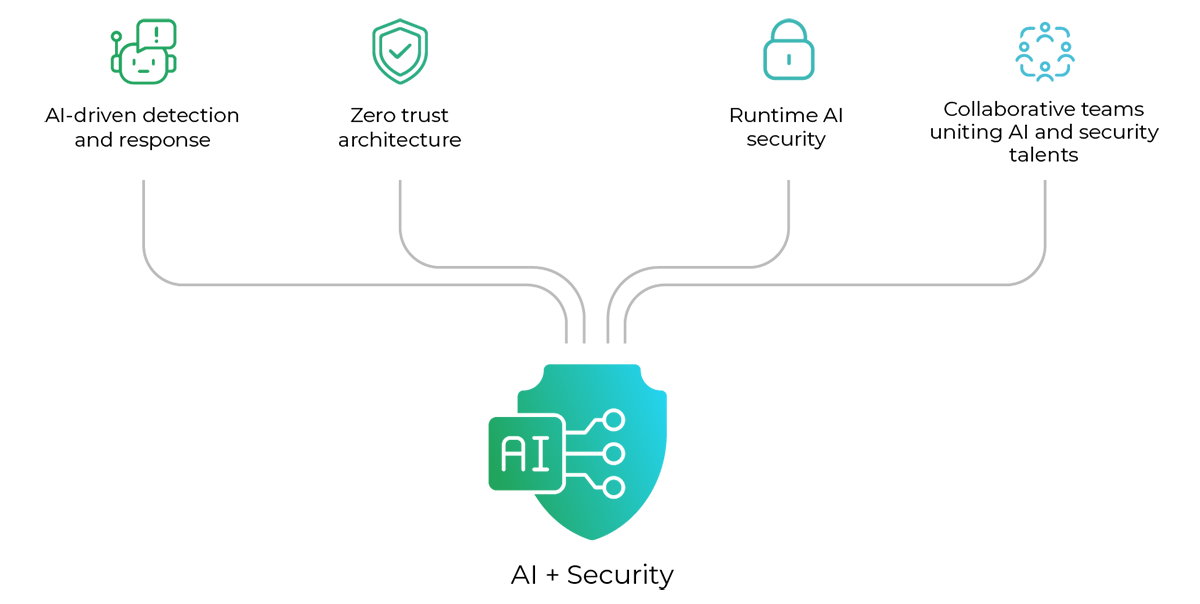

Gruve’s Approach: Embedding Security into Every Layer

Unlike many other organizations where AI and cybersecurity are two separate domains, Gruve treats them as one. The company’s architecture reflects a belief that AI security must be pervasive and proactive.

Key pillars of Gruve’s approach include:

- AI-driven detection and response within all cybersecurity products.

- Zero trust architecture as a foundational design principle.

- Runtime AI security that continuously monitors behavior at scale.

- Collaborative architecture teams uniting AI engineers, data scientists, and cybersecurity experts.

In the times we are living in, when technology is evolving rapidly, we can’t treat security as an add-on. Today, it is the architecture and demands proactive measures to preempt challenges. Gruve’s leaders believe that building secure AI infrastructure from the start protects data and accelerates trust and innovation.

Conclusion

AI’s non-deterministic nature is rewriting the rules of cybersecurity. Enterprises must evolve from static protection models to dynamic, learning-based defenses. Security can no longer be reactive. Rather, it must be architectural.

Gruve’s collaboration between cybersecurity and solutions architecture teams demonstrates how leadership, foresight, and technical integration can make AI safer for everyone.

As AI continues to grow, the question is no longer whether it will change security, for it already has. The question is how quickly we can adapt.

Let’s Talk