
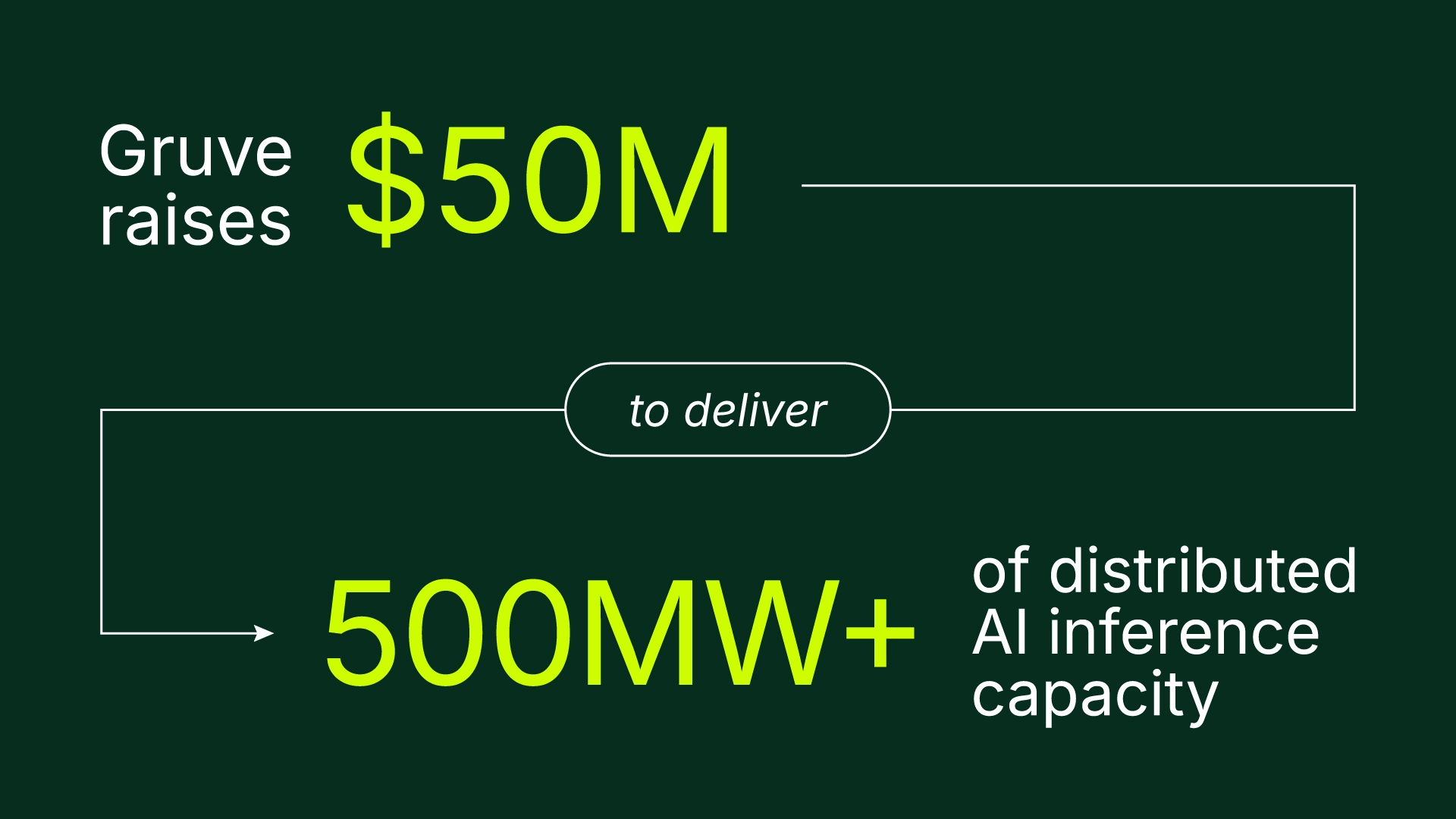

Speed to Scale: Gruve Unlocks 500MW+ of Distributed AI Inference Capacity, Raises $50M to Accelerate Deployment

- Gruve’s production-ready Inference Infrastructure Fabric delivers immediate capacity with industry-leading unit economics for scalable, profitable AI.

- 500MW+ of distributed inference capacity available across Tier 1 and Tier 2 U.S. cities—deployable in months, not years.

- Purpose-built for low-latency, high-throughput inference workloads as demand shifts from training to production.

February 3, 2026 — [Redwood City, CA] — Gruve, a leader in AI services and infrastructure, today announced the availability of more than 500 megawatts of distributed AI inference capacity across the United States. The company also announced a $50 million follow-on Series A financing to accelerate deployments, expand strategic partnerships, and scale its full-stack agentic services.

The financing brings Gruve’s total funding to $87.5 million and was led by Xora Innovation (backed by Temasek), with participation from Mayfield, Cisco Investments, Acclimate Ventures, AI Space and other strategic investors.

The capital accelerates Gruve’s ability to make low-latency AI inference capacity immediately available across Tier 1 and Tier 2 U.S. cities and scale efficiently as demand grows, without multi-year data center buildouts.

The Execution Gap in AI

As inference becomes the dominant AI workload, infrastructure has emerged as the industry’s primary constraint. While models, agents, and hardware continue to see breakthroughs, the systems running them have not kept pace.

Most production inference today relies on infrastructure that was never designed for low-latency, high-throughput, cost-sensitive AI, resulting in unsustainable costs, mounting technical debt, and weak unit economics.

Gruve’s Inference Infrastructure Fabric was built to close this gap.

Inference Infrastructure Services Purpose-Built for Production AI Workloads

Gruve’s Inference Infrastructure Fabric is a distributed platform engineered specifically for production-grade AI inference, delivering predictable latency, scalable throughput, and industry-leading economics.

Key capabilities include:

- 500MW+ of expandable U.S. capacity, leveraging excess power and existing infrastructure near Tier 1 and Tier 2 cities, enabled by long-term partnerships with Lineage, Inc. (NASDAQ:LINE) and other major colocation providers

- Modular, high-density, rack-scale inference capacity, engineered for cost efficiency in inference-heavy workloads and rapid deployment

- A distributed, low-latency edge fabric for seamless connectivity and workload orchestration across sites

- Full-stack operations, including a 24×7 AI-powered SOC, network services, and cluster management to meet enterprise-grade reliability and performance standards

Gruve is bringing 30MW live today across four U.S. sites, with additional capacity under development and further near-term expansions in Japan and Western Europe. This unique approach bypasses multi-year data center build cycles and delivers AI-ready capacity in months instead of years.

Built for Neoclouds, Enterprises, and AI-Native Startups

Gruve’s distributed inference infrastructure is designed for organizations moving from experimentation to production without compromising performance or economics, including:

- Neoclouds scaling inference economically at the edge

- Enterprises deploying real-time agents and mission-critical AI workloads

- AI-native startups moving from prototype to production

By placing inference close to users and data sources, Gruve reduces latency and operating costs while improving reliability, unlocking true speed to scale for the next generation of AI applications.

Customer and Investor Validation

“Over the past decade, Lineage has made significant investments in resilient infrastructure as well as energy optimization and transition. We are now partnering with Gruve to strategically repurpose our excess power capacity to support next-generation AI applications while creating value for our shareholders.”

— Sudarsan Thattai, CTO/CIO, Lineage

“Gruve’s innovative modular compute for inferencing enables us to utilize stranded power capacity in our data center. Gruve’s high-density AI Inference Infrastructure requires a far smaller real estate footprint, which is a game changer in a highly space- and power-constrained Silicon Valley market.”

— Scott Brookshire, CTO/Co-Founder, OpenColo by American Cloud

“As AI shifts from training to inference, the industry faces a critical infrastructure gap. Models continue to advance rapidly, but the systems running them in production haven’t kept pace. Economics, latency, and operational rigor now determine whether AI can scale in the real world. What excites us is that Gruve is taking a fundamentally different approach to enterprise AI by building the infrastructure and services layer that makes production AI viable at enterprise scale. We’re excited to deepen our partnership with Tarun and the Gruve team as they help enterprises, neoclouds, and AI-native startups unlock AI’s true speed to scale.”

— Navin Chaddha, Managing Partner, Mayfield

“Gruve’s Inference Infrastructure Fabric combines modular state-of-the-art pods with a distributed network architecture to enable rapid capacity deployment in power-available locations today — without compromising on latency. As demand for inference accelerates, scalable, low-latency infrastructure with strong unit economics is increasingly critical, and Gruve is well positioned to meet that need as it scales in 2026.”

— Phil Inagaki, Managing Partner and Chief Investment Officer, Xora Innovation

“We’re launching our Inference Infrastructure with 30MW across four U.S. sites, immediate capacity available nationwide, and near-term expansions in Japan and Western Europe. Combined with our 24×7 AI-powered SOC, inference fabric and infrastructure operations, Gruve is ready to support customers at true production scale.”

— Tanuj Mohan, GM & SVP, AI Platform Services, Gruve

About Gruve

Gruve helps enterprises, neoclouds and AI startups build and operate infrastructure-to-agent AI solutions where technical performance and business ROI converge. Through service offerings spanning AI Infrastructure, Data Foundation, Inference Infrastructure Fabric, and AI Application Accelerator, Gruve enables scalable, secure, and measurable AI execution in production.

Learn more at www.gruve.ai.

Media Contact

Name: Ash Bao

E-mail: press@gruve.ai